After becoming somewhat frustrated with Dynamo’s native CSV.ReadFromFile node, I decided to find a smarter way to parse a CSV file. The node splits the data into a new column at every comma, which becomes a problem when a cell itself contains commas.

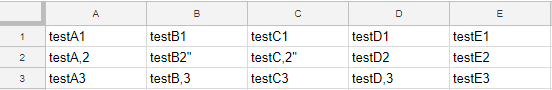

More specifically, the data I am using is downloaded as a CSV file from Google Sheets. Reading the file as text and parsing it with Python instead preserves the structure of the data. Above is the output from the native Dynamo node and below is what is returned from the Python script:

The Python script itself is very simple (taken in large part from this post on Stack Overflow and adapted to work with IronPython in Dynamo):

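    import sys

    # Make the IronPython standard library importable inside Dynamo
    sys.path.append(r"C:\Program Files (x86)\IronPython 2.7\Lib")

    import csv
    import StringIO

    # The contents of the CSV file, read as a single string
    myCSV = IN[0]

    output = []

    # Wrap the string in a file-like object so csv.reader can iterate over it
    source = StringIO.StringIO(myCSV)
    csvOUT = csv.reader(source, dialect=csv.excel)

    for line in csvOUT:
        output.append(line)

    OUT = output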
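To see the difference outside Dynamo, here is a minimal sketch that compares a naive comma split with `csv.reader` on a made-up two-line sample. It assumes a regular Python 3 interpreter, so it uses `io.StringIO` rather than the IronPython 2.7 `StringIO` module; `sample_text` and `parse_csv_text` are illustrative names and are not part of the script above.

```python
import csv
import io

# Hypothetical sample: the second field of the data row contains a comma.
sample_text = 'name,notes\r\ntestA1,"first, second"\r\n'


def parse_csv_text(text):
    """Parse CSV text into a list of rows using the csv.excel dialect."""
    # newline="" leaves line endings untouched so the csv module handles them
    source = io.StringIO(text, newline="")
    return [row for row in csv.reader(source, dialect=csv.excel)]


# Splitting on every comma breaks the quoted cell into two pieces.
naive_rows = [line.split(",") for line in sample_text.splitlines()]

# csv.reader keeps the quoted cell together and strips the enclosing quotes.
parsed_rows = parse_csv_text(sample_text)

print(naive_rows[1])   # three pieces
print(parsed_rows[1])  # two fields
```

The naive split returns three pieces for the second row, while `csv.reader` returns the two fields that were actually in the sheet.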

EDIT: After looking at the output a bit more closely, neither solution seems to handle extra quotes very well. The item testB2" has two extra quotes in the native Dynamo output and one extra quote in the Python output. Other than that, it works pretty well.
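When tracking down where an extra quote comes from, it can help to check how `csv.reader` treats quote characters in isolation. The sketch below is an assumption-laden illustration: the sample lines are invented, not taken from my file, and it targets Python 3 (`io.StringIO`) rather than IronPython. `parse_line` is a hypothetical helper.

```python
import csv
import io


def parse_line(line):
    """Parse a single CSV line with the csv.excel dialect."""
    return next(csv.reader(io.StringIO(line), dialect=csv.excel))


# A quote inside a quoted field is written as two quotes and read back as one.
escaped = parse_line('testB1,"testB2""",testB3')

# A stray quote in the middle of an unquoted field is kept as a literal character.
stray = parse_line('testB1,testB2",testB3')

# A cell whose text is itself wrapped in quotes keeps that pair of quotes.
wrapped = parse_line('testB1,"""testB2""",testB3')

print(escaped)
print(stray)
print(wrapped)
```

If the raw text of the file shows a different quoting pattern from these, that is a hint that the extra quote is being introduced before the text reaches the parser.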

EDIT 2: The CSV dialect in my original script was incorrect; it should be the csv.excel dialect, which is what the script above uses. With the wrong dialect, the file is parsed incorrectly.