Good day.

I would like your help.

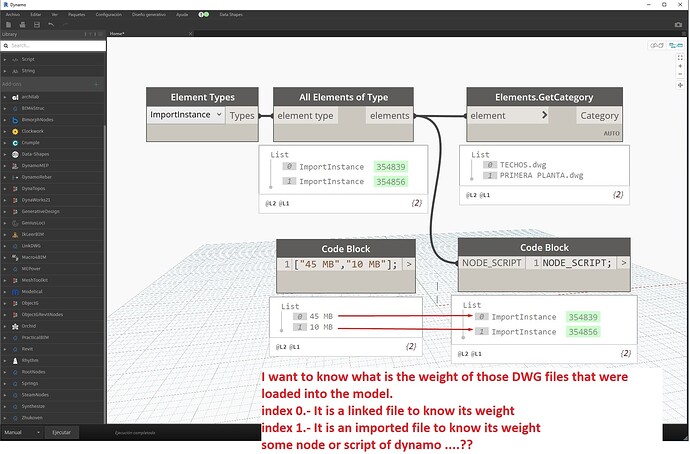

I am trying to get the weight of DWG files, both imported and linked.

I would appreciate your support and recommendations…thank you very much.

Attached files for testing… thanks.

01_OBTENER EL PESO DE ARCHIVOS DWG.dyn (7.2 KB)

01_Get weight from dwg file.rvt (5.1 MB)

I updated your thread title to be “file size” instead of “weight” as that’s the proper term in English and should help with filtering/searching on the forum as well as getting you some more attention.

You’ll need to work back from the link to the file path and then get the actual file at that location in order to determine the file size.
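As a rough sketch of that last step: once a link has been resolved to a path on disk, the size is an ordinary file-system lookup. The Python below assumes the paths were already collected from the DWG links (that Revit-side step is not shown), and `dwg_file_sizes`, `to_megabytes`, and the example paths are made-up names for illustration.

```python
import os


def dwg_file_sizes(paths):
    """Map each path to its size in bytes, or None if the file is not found."""
    sizes = {}
    for path in paths:
        # Links can point at files that were moved or deleted, so check first.
        sizes[path] = os.path.getsize(path) if os.path.isfile(path) else None
    return sizes


def to_megabytes(size_in_bytes):
    """Convert bytes to megabytes (1 MB = 1024 * 1024 bytes here)."""
    return size_in_bytes / (1024.0 * 1024.0)


# Hypothetical paths; replace with the paths resolved from your links.
example_paths = [r"C:\Projects\Survey\site.dwg", r"C:\Projects\Survey\missing.dwg"]
for path, size in dwg_file_sizes(example_paths).items():
    label = "not found" if size is None else "{:.2f} MB".format(to_megabytes(size))
    print(path, label)
```

A path that no longer exists comes back as `None` rather than raising an error, so a broken link does not stop the rest of the list from being measured.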


For a link, that could work.

But for an imported file, the source file is gone and the DWG only exists inside the Revit model. Once it is inside Revit, how could I get that information?

At that point I don’t think you really could. Why do you need file size, particularly for an object that is not stored as a file?


Internally, Revit must be recording the weight of the DWG files that were imported.

Maybe. But why would you assume it must? The RVT is the file at that point. The families loaded into the model are no longer RFAs so would you expect Revit to somehow track their file size as well? Everything loaded into a model does affect performance and contribute to the overall size, but I wouldn’t think that would be tracked individually somehow. Again, how would you define disk space when the object isn’t independently stored on disk?

Revit is not registering the file size of imports. All DWG links and imports result in completely different data, and as such there is effectively zero functional value in the file size.

Also, file size has minimal to no impact on performance.

So you mean that if I import 1 million files, the model will only weigh 1 MB? If that were true, then why does importing files make the Revit model slow?

There’s no direct relationship between file size and performance. You can have a 50MB file that performs flawlessly and you can have a 50MB file that performs like crap. File size itself is one of the lesser contributing factors to performance. Especially in a database format like Revit, where there may be other internal parameters/properties/warnings/etc. that get tied to a loaded object like a DWG, there’s much more going on inside the model that contributes to that object’s performance than the external file size.

What you mention is very interesting. As far as I understand, the warnings generated while building the BIM model greatly influence the functionality and size of the Revit file. How can I find out the weight and the impact that a warning has within the model?

Warnings can, at a certain point, be a large influence on model performance (not sure about size). If you’re wanting to look at warnings that’s a separate issue from file size.

There are some nodes (I think from archi-lab?) that deal with warnings that would be a good starting point. You could also go through the API but there’s not much in the way of managing warnings (last time I checked anyway).

Warnings themselves also don’t have a direct relationship with file size or performance. You’d be able to look at your model as a whole, with all its warnings, but you’d still have to make an educated decision on how much of an impact those warnings are making on your model.
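A hedged starting point for that kind of review is simply counting how often each warning type occurs, so the most frequent ones stand out. The sketch below assumes the warning descriptions have already been pulled out of the model as plain strings (for example with the nodes mentioned above); `summarize_warnings` and the sample descriptions are illustrative and not taken from this model.

```python
from collections import Counter


def summarize_warnings(descriptions):
    """Return (description, count) pairs, most frequent first."""
    return Counter(descriptions).most_common()


# Illustrative sample; real descriptions would come from your model.
sample = [
    "Elements have duplicate 'Number' values.",
    "Line is slightly off axis and may cause inaccuracies.",
    "Line is slightly off axis and may cause inaccuracies.",
]
for description, count in summarize_warnings(sample):
    print(count, description)
```

The count is only one input. Which warning types actually hurt the model is still a judgment call.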

So this is getting a bit close to off topic… We might want to edit the title later if we keep on this path (if the mood strikes me I might just take that action on my own). But since we’re on the subject, here goes nothing.

First up: file performance is not a science, but an art. What performs well for one team can be crippling to another because of the uses and workflows of each team. There are some actions and items you can identify which have significant performance impacts, so it’s worth discussing. I’ll do a quick outline of what I’ve seen in my experience in no particular order, but you should do your own review in the context of your team(s).

1) Quality of data. It should go without saying, but garbage in will result in garbage out. In the context of Revit this can mean bad imports, massive amounts of redundant info, and other items which generally just take up compute space without providing any direct benefit. My personal guideline is: If you don’t need it, don’t put it in the model. If you do need it, make sure it’s natively constructed (not imported) and built in the context of the model you’re working in. It turns out that it’s much more difficult to make native content which performs poorly when compared to imports - like multiple orders of magnitude harder. If you absolutely must work with data you didn’t create (e.g. a survey) then it’s on you to confirm that data’s quality before bringing it in - check the geometric extents, layers, and the like before you import a DWG, and if something isn’t good then modify it before you bring it in.

2) Size of utilized external documents which are not yet cached. The cached bit is specific to cloud projects, but this comes up enough that it gets its own item in the list. Every link has to be loaded into memory to display secondary data. If you have 5 GB of linked files which are linked via Desktop Connector, then you’re going to have to download 5 GB of data each time they update. That will take a while, based entirely on how the internet works. Either don’t link them all or reduce the frequency of updates.

3) Warning count and severity. Every element in your model is another thing which has to be computed. That doesn’t come as a shock, but what might be surprising is that each warning is an element. More to the point though, every time an element has to be ‘recalculated’ your system is going to have to do some processing, and warnings are evaluated pretty much constantly. My personal guideline for warning count is to target 0. It’s hard, but things will perform better. That said, some warnings have more impact than others - lines off axis are a common issue which ought to be addressed first, as anything which interacts with that off axis object will suffer a similar delay, while duplicate room numbers impact a lot less. Which is most important is best left to you - @Konrad_K_Sobon has an excellent blog post on warnings here: https://archi-lab.net/digging-through-revit-warnings-to-find-meaning/. I’d make that required reading for every seasoned Revit user if it were up to me.

4) Complexity of parametric constraints. Parametric constraints are SUPER helpful, but remember that family 2 can’t have its geometry drawn until family 3’s references are calculated, which requires family 3 to have its geometry drawn, which requires the wall to be drawn… Expand this over a list of 10,000 elements and suddenly you’re facing a massive set of calculations to draw one family… Did I mention that since family 2 cuts an opening in the wall we can’t completely draw that yet either? Fortunately Revit is extremely good at running such calculations. However, you can still surpass the level at which that constraint is efficient, and instead it’s suddenly an anchor around your project’s load time. In some cases it may make sense to draw a reference plane, or even remove constraints once the design starts to solidify.

5) Complexity of geometry drawn. This is consistent in every program I have ever seen, and will likely never go away. Multiple layered gradients at a high DPI in Photoshop will bring things to a halt, as will multiple layered hatches at a high enough density in AutoCAD, as will multiple complex geometries and fills at a given density in Revit. Stuff like shadow calculations and other tweaks which can make drawings look WAY better can slow things down a TON while you’re doing non-presentation work. As such I recommend keeping a separate view for each type of drawing, in addition to working views for the project team so they can just display the data they need to make the design decisions necessary before they look into the documentation and presentation aspects. Note that these views will be purged often (see #8).

6) Size of view and displayed element count. Geometry is secondary data in Revit. This means that everything you see has to be calculated on demand; it’s not stored in the .rvt itself. The more you display (and the more complex stuff you display - see #5) the slower things will go. 10k mullions consisting of a rectangle is one thing, but 10k mullions consisting of 500 line segments to show the detail of the profile at the glazing insert is another. Keep it simple by using detail levels when you’re going to be printing at 1:100 scale anyway.

7) Quantity of synced changes. Oftentimes the slowest part of the day is opening and syncing. This has become more apparent to users as more companies shift to cloud projects, and like the external references, each of these changes has to be downloaded and incorporated into your local copy before your stuff can be added to the central… Revit’s personal accelerator will actually ‘pre-fetch’ up to 5 GB of updates in advance, however your system won’t incorporate those until you sync. So that model you opened last Friday and didn’t sync before you signed out, and which now has a half week of your coworkers’ changes? Yeah, that’s going to be a slow sync. Worse yet, all of your changes from that Friday now have to be pushed to everyone’s local, which will be a larger number even though you hardly touched stuff because you had to incorporate so much at once. My guideline on this is to have everyone sync once every 15 minutes or per transaction for larger efforts like group edits/family loading.

8) General file bloat. When is the last time you purged your files completely? That working view with 1000s of dimensions that was used a LOT in schematic but hasn’t been touched since? That’s 1000s of unused data points which are slowing you down with no benefit; we can always bring them back from an archive if needed anyway - give it the boot. My guideline on this is to purge completely and remove all working views which are more than a week old (with an exception for actively utilized and properly filed working views).
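Two of the points above (#2 and #6) come down to simple arithmetic, which a few lines of Python can make concrete. This is a back-of-the-envelope sketch: the 100 Mbit/s connection speed is an assumed figure, a rectangle is counted as 4 line segments, and the function names are made up for illustration. It ignores latency, caching, and everything else Revit does beyond drawing lines.

```python
def download_seconds(size_gb, speed_mbit_per_s):
    """Ideal transfer time, using 1 GB = 8000 megabits (decimal units)."""
    return size_gb * 8000.0 / speed_mbit_per_s


def displayed_segments(element_count, segments_per_element):
    """Total line segments a view has to generate for identical elements."""
    return element_count * segments_per_element


# Item 2: 5 GB of links over an assumed 100 Mbit/s connection.
seconds = download_seconds(5, 100)
print(seconds, "seconds at best")

# Item 6: 10k mullions drawn as rectangles versus detailed profiles.
simple = displayed_segments(10000, 4)
detailed = displayed_segments(10000, 500)
print(simple, detailed, detailed // simple)
```

Under those assumptions the links take at least 400 seconds to download on every update, and the detailed mullion profiles mean 125 times as many segments to generate as the plain rectangles.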
